Anvil enters year four of production

Anvil, one of Purdue’s most powerful supercomputers, continues its pursuit of excellence in HPC as it enters its fourth year of operations. Funded by a $10 million acquisition grant from the National Science Foundation (NSF), Anvil began early user operations in November 2021 and entered production operations in February 2022. After three years online, Anvil has more than proven its value. The supercomputer has been used to help over 12,000 researchers push the boundaries of scientific exploration in a variety of fields, including artificial intelligence, astrophysics, climatology, and nanotechnology. This past year was also marked by an explosion of growth for Anvil, both in machine size and usage statistics. Thanks to supplemental funding from the NSF’s National Artificial Intelligence Research Resource (NAIRR) Pilot, the Anvil AI partition was added to the supercomputer and brought online. A total of 84 Nvidia H100 SXM GPUs were procured and added to the system. With this upgrade, Anvil is now poised to deliver a world-class AI supercomputing resource to researchers nationwide.

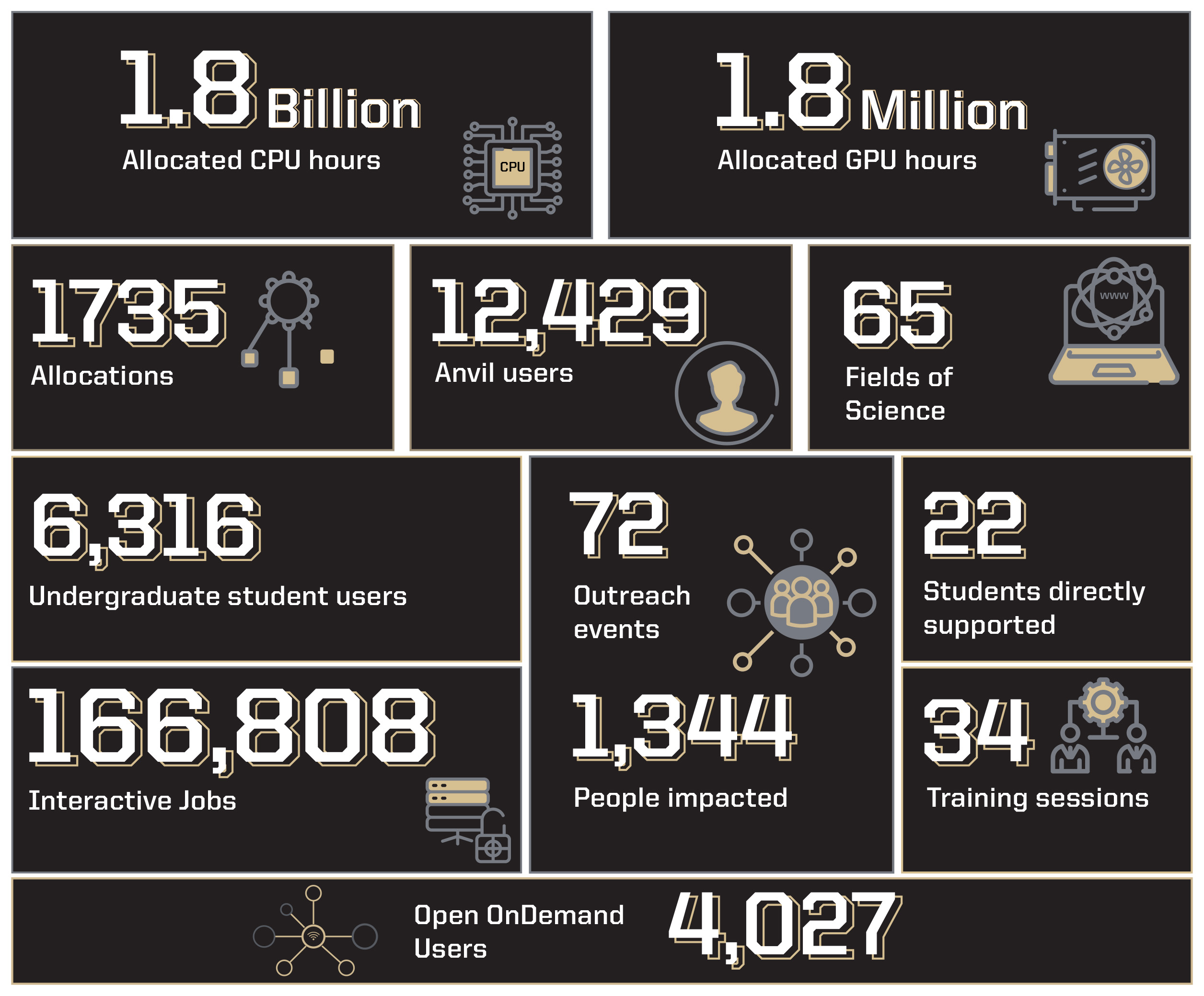

Anvil at a Glance—Three Years of Operations

Over the past three years, Anvil has had a significant impact on scientific research and student development. With more than 12,000 total users thus far (double the number from its second year of operations), of which over 6,000 were undergraduate students (another twofold increase), Anvil is not only helping meet the growing need for high-performance computing (HPC) within the realms of research, but also actively assisting with the development of cyberinfrastructure professionals of tomorrow. Overall, Anvil has allowed users access to 1.8 billion CPU hours and 1.8 million GPU hours, supporting research across 65 diverse scientific domains. In 2024 alone, 165 research publications (a ~2.3x increase from 2023) cited Anvil usage. Aside from the supercomputer itself, the Anvil team has been hard at work promoting the benefits of HPC and ensuring the nation has a workforce trained in the use, operation, and support of advanced cyberinfrastructure. In its three years of operations, the Anvil team has participated in 72 outreach events and conducted 34 training sessions, with a multitude already planned for the coming year. These training sessions are designed to deliver working knowledge of HPC systems and teach users how to get the most out of their research time on Anvil. The team also provided hands-on training to students through initiatives such as the Anvil Summer REU program and RCAC’s CI-XP student program, which allowed the students to gain much-needed knowledge and experience in the field of HPC.

Anvil Tech Specs

Anvil is a supercomputer deployed by Purdue’s Rosen Center for Advanced Computing (RCAC) in partnership with Dell and AMD. The system was created to significantly increase the computing capacity available to users of the NSF’s Advanced Cyberinfrastructure Coordination Ecosystem: Services and Support (ACCESS), a program that serves tens of thousands of researchers across the United States. Before the new expansion, Anvil’s system consisted of 1,000 Dell compute nodes, each with two 64-core third-generation AMD EPYC processors, 32 large memory nodes with 1 TB of RAM per node, and 16 GPU nodes, each with four NVIDIA A100 Tensor Core GPUs, all of which are interconnected with 100 Gbps NVIDIA Quantum HDR InfiniBand. The new NSF NAIRR funding has added 21 Dell PowerEdge XE9640 compute nodes, each with four NVIDIA 80 GB H100 SXM GPUs, as well as an additional 1 PB of flash-based object storage integrated into Anvil’s composable subsystem. The new GPU nodes also feature an additional NDR InfiniBand fabric to support larger AI workloads.
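
As a quick check on the figures above, the following Python sketch tallies the aggregate CPU core and GPU counts implied by the per-node specifications. The node counts and per-node hardware come from the text; the dictionary layout and function names are illustrative only, and the tally leaves out the CPU cores of the large memory and GPU nodes because their processor configuration is not stated here.

```python
# Node counts and per-node hardware as stated in the text above.
# The structure and names are illustrative, not an official inventory.
PARTITIONS = {
    "cpu": {"nodes": 1000, "sockets": 2, "cores_per_socket": 64},
    "a100": {"nodes": 16, "gpus_per_node": 4},
    "h100": {"nodes": 21, "gpus_per_node": 4, "gpu_mem_gb": 80},
}


def total_cpu_cores(partition):
    """CPU cores across all nodes of a CPU partition."""
    return partition["nodes"] * partition["sockets"] * partition["cores_per_socket"]


def total_gpus(partition):
    """GPUs across all nodes of a GPU partition."""
    return partition["nodes"] * partition["gpus_per_node"]


cpu_cores = total_cpu_cores(PARTITIONS["cpu"])
a100_gpus = total_gpus(PARTITIONS["a100"])
h100_gpus = total_gpus(PARTITIONS["h100"])
h100_mem_gb = h100_gpus * PARTITIONS["h100"]["gpu_mem_gb"]

print(f"Standard compute cores: {cpu_cores:,}")
print(f"A100 GPUs: {a100_gpus}")
print(f"H100 GPUs: {h100_gpus} ({h100_mem_gb:,} GB of GPU memory in total)")
```

The H100 total of 84 matches the number of GPUs procured for the Anvil AI partition.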

“Anvil joined the NAIRR Pilot as a resource provider in May of 2024,” says Rosen Center Chief Scientist Carol Song, principal investigator and project director for Anvil. “We made available Anvil’s discretionary capacity, which was allocated entirely to researchers, right away. This H100 GPU expansion not only gives Anvil a significant boost to the amount of resources available to the NAIRR Pilot users, but also provides a major increase in Anvil’s GPU computing power. The H100 GPU outperforms the current A100 GPU in Anvil by as much as nine times in computing speed. Many workloads, especially AI model training and inference, will run much faster, reducing the time-to-results for researchers.”

In 2024, GPU capabilities were upgraded for the Anvil Composable Subsystem of the Anvil supercomputer. The Anvil Composable Subsystem now hosts eight composable nodes, each with 64 cores and 512 GB of RAM, and multiple GPU nodes with a total of 4 NVIDIA A100 80GB GPUs and 4 NVIDIA H100 96GB GPUs. The Anvil Composable Subsystem is a Kubernetes-based private cloud managed with Rancher that provides a platform for creating composable infrastructure on demand. This cloud-style flexibility allows researchers to self-deploy and manage persistent services to complement HPC workflows and run container-based data analysis tools and applications. The composable subsystem is intended for non-traditional workloads, such as science gateways and databases, and the addition of the composable GPU node supports tasks such as AI inference services and model hosting.

Anvil Innovations

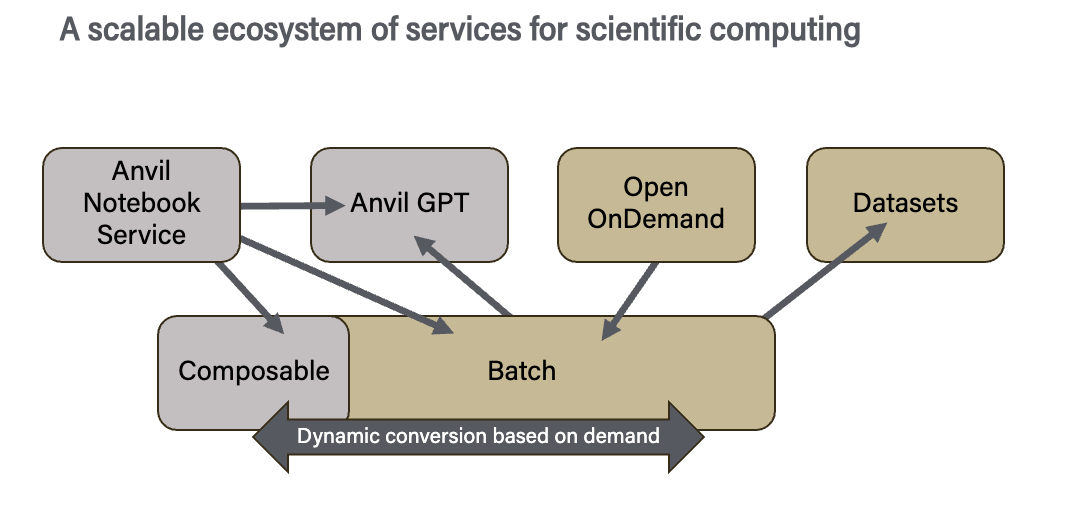

The Anvil supercomputer has been host to a number of innovations throughout the past year. From on-premises generative AI, to a Jupyter Notebook platform, to increased datasets and a streamlined, user-friendly dashboard, the Anvil team has strived to provide researchers with the best cutting-edge tools to help advance their work. These innovations include:

AnvilGPT: AnvilGPT is a large language model (LLM) service that makes open-source LLMs such as LLaMA accessible to ACCESS researchers worldwide. Unlike other LLM services, AnvilGPT is hosted entirely with on-premises (on-prem) resources at Purdue. This means researchers have more democratized access to LLMs, as well as more control. AnvilGPT is hosted on the Anvil Composable Subsystem and leverages the powerful H100 GPUs for rapid processing. The service was designed to provide a secure, central, and flexible AI platform tailored for Anvil users. Anyone with an Anvil allocation has access to AnvilGPT for free.

Anvil Notebook Service: The Anvil Notebook Service is a cloud-based, scalable platform for web-based Jupyter Notebooks. It offers access to CPU and GPU resources through a variety of Jupyter notebooks supporting Python, R, Julia, and popular machine learning frameworks like TensorFlow and PyTorch. The notebook service is also tightly integrated with the Anvil HPC system, allowing users to interact with data stored on Anvil and submit jobs to Anvil's batch system.

Scaling Anvil Composable: With the addition of AnvilGPT and the Anvil Notebook Service, on top of the 12 Science Gateways it already hosts with various scaling requirements, Anvil has seen an ever-increasing demand for Kubernetes infrastructure. To meet this heightened demand (which often exceeded the Kubernetes resource capacity), the Anvil team has developed an automated batch-to-Kubernetes conversion process. This process utilizes idle batch nodes on the Anvil HPC system to increase Kubernetes resources, which not only allows Kubernetes to perform at scale, but also maximizes the use of Anvil’s 1000+ node capacity. The ability to scale the composable system has already been used to great effect:

- nanoHUB STARS Workshop
  - nanoHUB staff integrated their hub with Anvil Composable to scale out tool sessions
  - Supported 75 participants launching tool sessions with 4 cores and 16 GB of RAM

- CyberFACES (NSF CyberTraining)
  - Custom JupyterHub supporting hundreds of participants

- Purdue Data Mine (Anvil Notebook Service, 2025)
  - 1,200+ students currently using Anvil batch to launch notebooks

Open OnDemand Dashboard: As part of their Anvil REU experience, undergraduate students Richie Tan and Anjali Rajesh developed an Anvil web dashboard to highlight Anvil usage metrics and make complex information more accessible to Anvil users. With this dashboard, Anvil users can view the metrics that show how they are using their computational resources and how they can improve their performance, all without any coding or command-line work. The dashboard has been so successful that it has been shared with the Open OnDemand project (on which it was built) for broader use. Some of its key features include:

- Homepage widgets showing service units, disk usage, queued jobs, etc.

- My Jobs page for a comprehensive view of recent jobs on Anvil.

- Performance Metrics page for job performance summary over specific periods of time.

- In-memory caching for API requests.
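
To illustrate the last feature in general terms, here is a minimal Python sketch of in-memory caching with a time-to-live (TTL), where repeated requests for the same metric reuse a recent result instead of calling the backend again. This is not the dashboard's actual code, which is not described in this article; the `TTLCache` class, the `fetch_queued_jobs` function, and the 60-second lifetime are all hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        """Return the cached value for key, calling fetch() only on a miss or expiry."""
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = fetch()
        self._store[key] = (now, value)
        return value


# Hypothetical stand-in for a slow metrics API request.
calls = {"count": 0}


def fetch_queued_jobs():
    calls["count"] += 1
    return {"queued_jobs": 3}


cache = TTLCache(ttl_seconds=60)
first = cache.get_or_fetch("queued_jobs", fetch_queued_jobs)
second = cache.get_or_fetch("queued_jobs", fetch_queued_jobs)  # served from memory
print(first, second, "backend calls:", calls["count"])
```

The trade-off in a design like this is freshness versus load: a longer TTL means fewer backend requests but staler widgets.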

Datasets: The Anvil team has incorporated popular domain-specific datasets onto the system to optimize user workflows. A module system enables searching by dataset category (e.g., hydrological models or geospatial models). The team also added automatic generation of web-based documentation to improve future discoverability and search. Perhaps the biggest innovation within the datasets is the conversational search made available through the dataset query. This enables a context-sensitive chat function that summarizes information from various dataset documents and works across multiple domains.

Enabling science through advanced computing

Thanks to its configuration and cutting-edge hardware, Anvil is one of the most powerful academic supercomputers in the US. When it debuted, the Anvil supercomputer was listed as number 143 on the Top500 list of the world’s most powerful supercomputers. Anvil’s advanced processing speed and power have allowed researchers to save hours of time on computations and simulations, enabling innovative scientific research and discovery. The highlights given below are but a few of the hundreds of use cases stemming from Anvil:

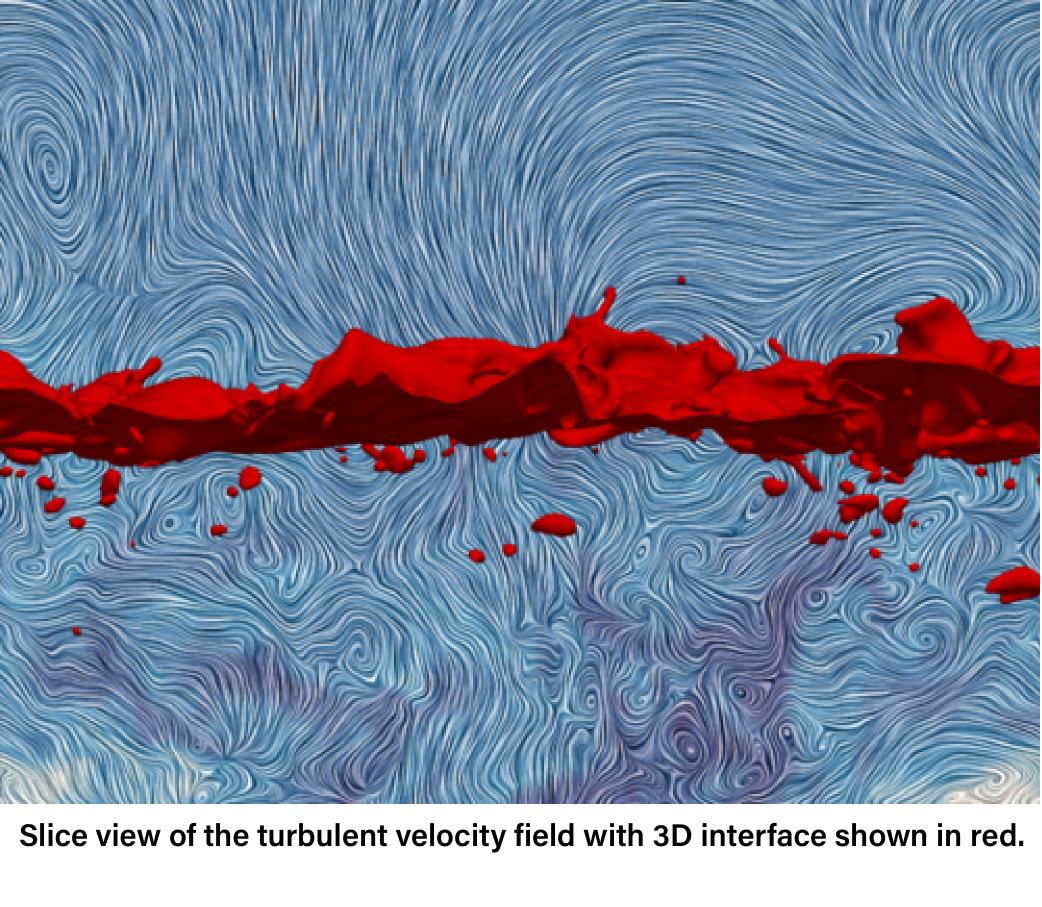

1) Researchers from the George Washington University used Purdue’s Anvil supercomputer to simulate fluid flows in order to elucidate the physics of turbulent bubble entrainment. Understanding this process will lead to practical applications in a variety of fields, including oceanography, naval engineering, and environmental science.

Andre Calado is a Graduate Research Assistant at the George Washington University, working to complete his PhD in computational fluid dynamics. He and his advisor Elias Balaras, a professor in the Department of Mechanical and Aerospace Engineering, wanted to advance the study of two-phase flows (air and water), specifically how turbulence underneath the water interacts with the water’s surface and the role this plays in air entrainment. The pair ran direct numerical simulations on Anvil to capture the physics of bubble entrainment with high fidelity. Their work has pushed the boundaries of what has been accomplished so far within two-phase flow research.

“We’re happy to be using Anvil to perform these calculations,” says Calado. “It has been very helpful. These are very large computations—we’re talking about thousands of cores at a time. So we need these resources to do the fundamental research in order to understand the physics, and then hopefully apply what we learn to more practical engineering calculations.”

2) Dirty air, or particle-laden flow, as it’s known in the hypersonics research world, can be extremely problematic for vehicles traveling at hypersonic speeds. Tiny particles, sub-micrometer in size, are deposited into the air via natural events, such as volcanic eruptions, ice clouds, and atmospheric dust, or through human-induced air pollution. These particles then impact the vehicle as it flies through the atmosphere. While it may seem that these particles are too small to be problematic, they can actually cause damage and increase the risk of functional failure. Dr. Qiong Liu, an Assistant Professor in the Department of Mechanical and Aerospace Engineering at New Mexico State University, along with Irmak Karpuzcu, Akhil Marayikkottu, and Deborah Levin, all from the Department of Aerospace Engineering at the University of Illinois, Urbana-Champaign, have used the Anvil supercomputer to elucidate exactly what happens when particles hit the surface of a hypersonic vehicle in flight.

“We are looking to understand the effects of tiny particle impact on the surface of hypersonic vehicles,” says Liu. “These high-speed vehicles usually have thermal protection around the surface to help with excessive heating, but repeated particle impact, even from such small particles, are going to cause damage to the thermal protection, which will cause significant troubles during flight.”

Using the direct simulation Monte Carlo (DSMC) method, the researchers studied the fundamental flow physics and particle trajectory in the flow field around a blunted cone, the most common forebody shape used in hypersonic flight. The simulations looked at particles ranging from 0.01 micrometers up to 2 micrometers in size. With these simulations, the group was able to determine both the effects that a single particle of varying sizes had on the bow shock and the statistical characteristics of those particles. They found that lighter particles (less than 0.02 micrometers) could not penetrate the bow shock wave, and so could never directly impact the vehicle. However, heavier particles (greater than 0.2 micrometers) passed through the bow shock, directly impacted the vehicle, ricocheted upstream, and then traveled downstream in the flow. This unique interaction and motion of heavier particles led to bow shock distortions.

With this study, the research team delivered some much-needed clarity regarding the physics of hypersonic flight, but it was only possible with help from supercomputing resources like Anvil. Liu was thrilled with Anvil’s performance throughout the project.

“We are really, really happy with computing on Anvil,” says Liu. “The code was well parallelized, so we had no problems running that. Also, the queue was very short, so we were able to submit jobs and get results very quickly. I’ve actually encouraged many of my new colleagues to apply to use Anvil because I had such a good experience.”

3) Researchers from the University of Wisconsin (UW)–Madison used Purdue’s Anvil supercomputer to study turbulence and turbulent transport in astrophysical plasmas. This research seeks to elucidate the fundamental physics of turbulence, which will have applications across the fields of fluid and plasma dynamics. The group not only pushed the boundaries of scientific research with their work, but also tested the performance limits of Anvil, utilizing upwards of half the machine (512 nodes at once) to run a single simulation.

Bindesh Tripathi, who spearheaded the project, is working toward finishing his doctoral dissertation in the Department of Physics at UW–Madison. Under the joint supervision of advisors Dr. Paul Terry and Dr. Ellen Zweibel, both of whom are professors at the university, Tripathi conducts research involving astrophysics and plasma physics, mathematical/theoretical physics, and numerical methods. Tripathi used Anvil to shed light on the underlying physics of stable-mode excitations within fluid and plasma dynamics, a little understood phenomenon that occurs at large (galactic) scales. To accomplish this task, Tripathi first had to make several bespoke changes to a 3-dimensional (3D) magnetohydrodynamics simulation software known as Dedalus. Then, in order to run the code successfully, the group needed access to an extraordinary amount of computing power, which the Anvil supercomputer was able to provide. To support the researchers’ work, the Anvil team set up a special allocation that allowed the group to utilize 512 nodes at once. The group routinely used 30,000 to 40,000 cores simultaneously. To be clear, this was a parallel code, so one single simulation required the use of all of the cores at the same time. This level of computation for a real-world research problem had not yet been tested on Anvil, but the computer was able to handle it with no issues. Tripathi’s code ran seamlessly, even at such a large scale, and he was thrilled with the performance of the system.

“I ran the Dedalus code, and I found it running beautifully well,” says Tripathi. “Anvil has a large number of cores, and the queue time was relatively short, even for the very large resources that I was requesting, and the jobs would run quite fast. So it was a quick turnaround, and I got the output pretty quickly. I have had to wait a week or even longer on other machines, so Anvil has been quite useful and easy to run the code. Anvil has also generously provided us with storage of a large dataset, which now amounts to 125,000 gigabytes from my turbulence simulations.”

Training and Education Impacts

Aside from enabling groundbreaking research across multiple fields of science, Anvil is being used as a tool to develop the future workforce in computing. From professional training and workshops to hands-on learning experiences for students, Anvil is helping to forge the next generation of researchers and cyberinfrastructure professionals.

Professional Training

One major training and educational impact made by Anvil involved supporting the 2024 BigCARE Summer Workshop, which took place at the University of California, Irvine (UCI). The BigCARE Workshop was a National Cancer Institute-funded biomedical data analysis workshop designed to train cancer researchers on how to visualize, analyze, manage, and integrate large amounts of data in cancer studies. This year’s workshop focused on analyzing and interpreting genomic and genetic data, including transcriptomic analyses, epigenomic analyses, genome-wide association analyses, and network analyses. Thanks to supplemental funding from the National Institute of Allergy and Infectious Diseases (NIAID), the workshop also covered COVID and microbiome data analysis by introducing infectious and immune-mediated disease-related data sets, a first for BigCARE. Dr. Min Zhang, the principal investigator on the NCI-funded project, taught the workshop participants the skills needed to analyze their research data, while Anvil provided an HPC environment that had a very low barrier to entry, ensuring that non-HPC professionals could quickly and easily complete their research without having to become experts in computing.

“During the previous big data workshops I organized,” says Zhang, “participants faced significant challenges as they had to navigate both the command line interface and the R programming environment, which often led to difficulties as most participants have limited computing skills. Anvil’s powerful computing capabilities allow participants to handle large-scale omics data more efficiently, making analysis of next-generation sequencing data more accessible.”

Anvil was so helpful for the workshop that Zhang intends to renew it as the resource for supporting BigCARE for the foreseeable future. “We are pleased to announce that our R25 grant, ‘Big Data Training for Cancer Research,’ has been renewed by the National Cancer Institute for the next five years,” says Zhang. “We look forward to the continued fruitful collaboration with the Anvil group, leveraging their expertise to drive our program forward.”

Another workshop supported by the Anvil supercomputer was the 2024 Southeastern Center for Microscopy of Macromolecular Machines (SECM4) data processing workshop. The workshop focused on teaching researchers how to process data for single-particle cryogenic electron microscopy (SPA-cryo-EM) analysis. The workshop was developed and led by Dr. Nebojša (Nash) Bogdanović, a faculty member specializing in cryo-EM who co-manages the operations of the SECM4 cryo-EM service center, located at Florida State University. Attendees learned how to use HPC-based cryo-EM software such as RELION, CryoSPARC, and ML-based Topaz for tasks like preprocessing, particle picking, 2D and 3D reconstruction, classification, and model building.

Due to the nature of cryo-EM work and the workshop’s size, the instructors required a resource capable of providing large-scale computing power. They turned to Anvil for that support.

“We understood that the computational resources required for this work are very intense,” says Dr. Bogdanović. “So we needed 4 to 8 GPUs, 500 GB to 1 TB of RAM (or more), as well as a large SSD allocation. What we managed to do with Anvil was to use their implementation of CryoSPARC, a software we use readily in our field, and distribute it to the 12 participants in our workshop to demonstrate how each step is carried out.”

The Anvil team provided the SECM4 workshop with access to the supercomputer’s advanced GPUs and granted a special dispensation to reserve a block of GPUs for the three-day course.

“So we, thanks to the kindness of the Anvil team, were able to reserve up to 10 GPUs simultaneously,” adds Dr. Bogdanović, “guaranteeing that our participants could run jobs during the workshop. That worked out wonderfully, and we are very grateful Anvil was able to do this.”

Dr. Bogdanović was delighted with Anvil’s performance. To prepare for the workshop, he gained access to Anvil months in advance through a proposal-based, NSF-funded ACCESS program, ensuring the system would fit his needs. CryoSPARC was already installed and ran flawlessly, better than his experience with the software on other HPC systems. RELION was also available, but he needed a different version for the workshop. The Anvil team was on hand to help and guided him through the specifics of the installation, and RELION worked perfectly once installed. Dr. Bogdanović prepared all the results for the workshop projects in advance to determine what was most suitable for inclusion and to keep backups on hand in case of any hiccups. Fortunately, everything ran smoothly, and the workshop was a huge success. Participants found it so useful that five went on to apply for and receive their own Anvil allocations.

Student Support and Education

In its third year of operations, Anvil expanded its scope of student support by directly and indirectly supporting high school students in their computing and HPC development.

The first major push to engage younger students came in the summer of 2024. RCAC, utilizing Anvil resources and with support from the Anvil staff, hosted two summer camps aimed at high schoolers. The camps were meant to introduce students to the college experience by giving them the chance to earn college credit, explore potential majors, and experience campus life.

CyberSafe Heroes: A Week of Cybersecurity Mastery was the first of the two camps. It focused on cybersecurity best practices and career pathways. Students participated in encryption challenges, ethical hacking simulations, cybersecurity escape rooms, online safety workshops, and engaging career panels with cybersecurity professionals.

The second camp, Code Explorers: Coding and Environmental Discovery, focused on creating an immersive introduction to coding, connecting it to environmental science. Students were able to code with microcontrollers, conduct data analysis with Python, create environmentally themed games and more.

Another instance of high school student support came when Sarah Will, a senior at the Science and Mathematics Academy at Aberdeen High School (SMA) in Aberdeen, Maryland, completed her senior capstone project by conducting research utilizing the Anvil supercomputer. Will worked under the guidance of PhD student Anastasia Neuman, from the Chemical and Biomolecular Engineering Department at the University of Pennsylvania. The pair decided to expand on research previously conducted by Neuman, which looked into how confinement within nanoparticle packings affected the miscibility (the ability to be mixed at a molecular level to produce one homogeneous phase) of a bulk polymer blend. For this project, the two used Anvil to simulate the effects nanoparticle packings have on block copolymers (BCPs). The results of the new project were unexpected, but will help experimental researchers produce materials with specific BCP phase structures. This could lead to novel polymer properties (e.g., improved malleability or conductivity), which could lead to solutions for problems such as CO2 separation, rechargeable batteries, food packaging, and tissue engineering.

One thing that stood out for Neuman was Anvil’s accessibility and ease of use. Will was a first-time HPC user. She had no experience working within a terminal and was unfamiliar with HPC server environments. Thanks to Anvil’s Open OnDemand portal, Will was able to log into the cluster via a web browser, even on the high school computers, which don’t allow students to download any software.

“I think it’s easier for students because they are used to working with a web browser more than they are a terminal,” says Neuman. “So being able to access Anvil with Open OnDemand made it a lot more user-friendly and a great introduction to computational work. I’ve even recommended Anvil to my supervisor at UPenn, who teaches many classes that introduce computational work to undergraduates.”

Anvil student support also extended to undergraduates. Throughout its third year Anvil supported roughly 1,700 students in a national data science experiential learning and research program known as The Data Mine. The goal of The Data Mine is to foster faculty-industry partnerships and enable the adoption of cutting-edge technologies. The course introduces students of all levels and majors to concepts of data science and coding skills for research. The students then partner with outside companies for a year to work on real-world analytic problems. Anvil provided 1 million CPU hours for the program and allowed the students to manage extensive research datasets, thanks to the supercomputer’s large capacity.

Anvil also supported nearly 60 students via the 2024 STARS summer program. STARS is an eight-week, on-site program offered by Purdue University’s College of Engineering. The program is designed to teach undergraduate students deep-tech skills in integrated circuit design, fabrication, packaging, and semiconductor device and materials characterization. The backbone of the program is Chipshub, the online platform for everything semiconductors. Chipshub is powered by nanoHUB, the first end-to-end platform for online scientific simulations.

“Chipshub extends nanoHUB’s success to deliver both open-source and commercial software that supports a semiconductor community through workforce development at scale,” says Gerhard Klimeck, Chipshub co-director, Elmore Professor of Electrical and Computer Engineering and Riley Director of the Center for Predictive Devices and Materials and the Network for Computational Nanotechnology.

Chipshub partnered with RCAC to leverage the power of the Anvil supercomputer. By taking advantage of the Anvil Composable Subsystem, Chipshub can deliver the power of HPC to hundreds of users at once and significantly cut down time spent waiting for results. This ability to compute at scale allows Chipshub to drive semiconductor workforce development throughout the nation without having to limit classroom size, which is precisely what the STARS program did.

“Chipshub proved itself as each member of the STARS cohort were concurrently running simulations, producing chip layouts, and running physical verification continuously for the final three weeks of STARS,” says Dr. Mark C Johnson, who led the STARS program. “Collectively, 12 teams of four to five students each produced a chip design that has been combined into a single layout and will be submitted in September, 2024 for fabrication.” During the program, Chipshub and Anvil powered 1,800 simulation sessions and 6,000 interactive hours.

The most direct and intensive undergraduate student support provided by Anvil was RCAC’s very own Anvil Research Experience for Undergraduates (REU) Summer 2024 program. The 2024 Anvil REU program saw eight students from across the nation gather at Purdue’s campus in West Lafayette, Indiana, for 11 weeks to learn about HPC and work on projects related to the operations of the Anvil supercomputer. Eight members of RCAC’s staff provided mentorship to the students throughout the summer, helping them to complete four separate Anvil-enhancing projects. The student participants of the program were:

- Jeffrey Winters, Computer Science and Engineering double major, University of California, Merced

- Alex Sieni, Computer Science and Statistics double major, University of North Carolina at Chapel Hill

- Richie Tan, Computer Science major, Purdue University

- Anjali Rajesh, Computer Science major, Rutgers University

- Nihar Kodkani, Computer Science and Math double major, Purdue University

- Selina Lin, Computer Science and Math double major, Purdue University

- Philip Wisniewski, Computer Science major, Purdue University

- Austin Lovell, Computer Science major, Purdue University

By summer’s end, these eight students made fantastic progress: they completed their projects, learned technical and people skills they will need when in the workforce, and gained an in-depth understanding of the world of HPC. In fact, since the conclusion of the 2024 Anvil REU program, six of the students have taken up student positions and continue their work at RCAC. Many have also gone on to present their work at national conferences, including the 2024 International Conference for High-Performance Computing, Networking, Storage, and Analysis (SC24) and the Global Open OnDemand 2025 Conference (GOOD 2025).

Industry Partnerships

Anvil’s third year of production saw an explosion of growth for its Industry Partnership program. This program allows industry users to utilize the Anvil supercomputer for their business needs, but at a fraction of the cost of private HPC companies. Examples of some of the current Industry Partnership users, as well as projects under discussion, include:

- MyRadar: high resolution weather prediction

- BlueWave AI Labs: AI/ML for nuclear plant operational, regulatory efficiency improvements

- Smart building technology company (Kubernetes GPU workloads)

- LLM-based tool for conversational assistance during emergencies

- AI-driven platform for airport power infrastructure management for electric aircraft

- Electromagnetic propulsion systems

- Generative AI for personalized content

- Medical research company working on blood test-based cancer detection

- Life sciences diagnostics company

- Technology company aimed at early detection of TBI and cognitive impairment

To learn more about the Industry Partnership program, please visit: https://www.rcac.purdue.edu/industry

Continuing Anvil’s success

The Anvil team is thrilled by all it accomplished in its third year, and is looking forward to driving discovery and innovation throughout its fourth year of operations and beyond. The team has multiple plans for the coming year, including targeted training and support, the development of a complete scalable and dynamic ecosystem of services, and increasing the conscientious use of AI for the advancement of science and technology.

“Anvil has established itself as a major HPC resource to the national research community,” says Preston Smith, Executive Director for the Rosen Center for Advanced Computing and co-PI on the Anvil project. “After three years in production, we are pleased with everything Anvil has enabled thus far, whether it be the science conducted on the machine or the training and education opportunities it has provided. Looking ahead to year four, our goal is to continue to innovate, helping expand the boundaries of scientific discovery, while still providing world-class support and education for researchers nationwide. With our inclusion as a resource for the NAIRR Pilot, we are looking forward to the new challenges in the upcoming year.”

Anvil is funded under NSF award number 2005632. The Anvil executive team includes Carol Song (PI), Preston Smith (Co-PI), Erik Gough (Co-PI), and Arman Pazouki (Co-PI). Researchers may request access to Anvil via the ACCESS allocations process.

Written by: Jonathan Poole, poole43@purdue.edu