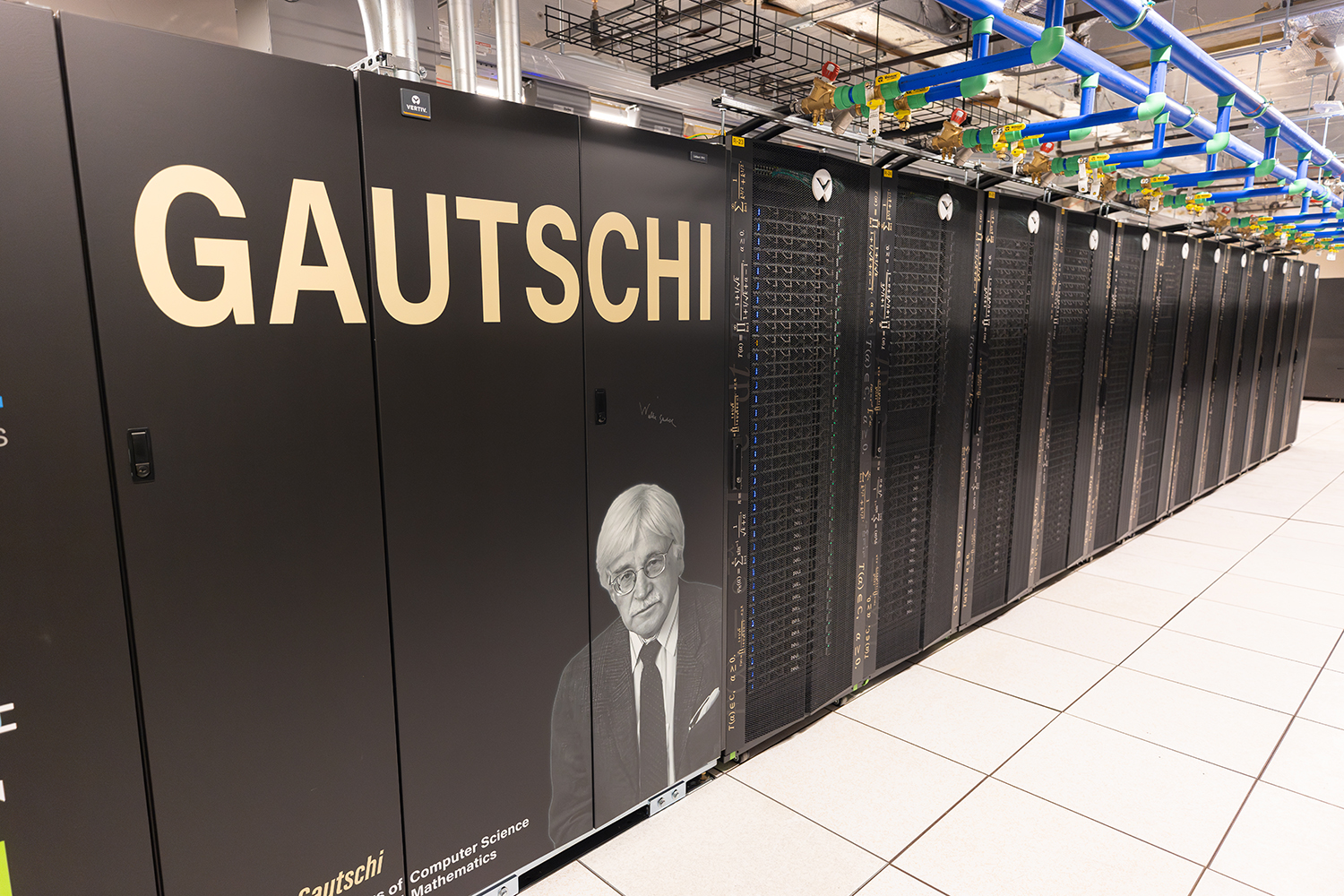

Gautschi-AI available for Purdue researchers

The highly anticipated Gautschi-AI system is now online and ready for use at Purdue University. This expansion of the new Gautschi supercomputer is designed to enhance artificial intelligence (AI) workflows and enable Purdue to lead the charge in AI research.

The Gautschi supercomputer was deployed by Purdue IT’s Rosen Center for Advanced Computing (RCAC) in early October, with Phase 1 providing Purdue researchers with next-generation CPUs, a limited number of GPUs for shared access, and approximately 2.5 PetaFLOPS of performance. The Gautschi-AI partition consists of 20 Dell PowerEdge XE9680 compute nodes, each with dual 56-core Intel Xeon Platinum 8480+ CPUs, 8 NVIDIA H100 SXM GPUs with 80 GB of memory each, and 8 non-blocking 400 Gbps NDR links. This massive acquisition of H100 GPUs will provide researchers with a whopping 10.7 PetaFLOPS of peak performance. Gautschi-AI is also designed to be expandable up to 32 nodes/256 GPUs, in anticipation of rising demand from AI researchers. Gautschi also features a high-speed all-flash parallel filesystem with 7 PB of capacity, which will ensure that its CPUs and GPUs remain fed with data.
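
As a rough sanity check on the headline figure, the sketch below multiplies the GPU count by a per-GPU peak. The per-GPU value is an assumption: 67 TFLOPS is NVIDIA's published FP64 Tensor Core peak for the H100 SXM, and the article does not say which precision the 10.7 PetaFLOPS figure refers to. The function name and constants are illustrative only.

```python
# Back-of-the-envelope check of the Gautschi-AI peak performance figure.
NODES = 20
GPUS_PER_NODE = 8

# Assumption (not stated in the article): the peak is quoted at the
# H100 SXM FP64 Tensor Core rate of 67 TFLOPS per GPU.
ASSUMED_TFLOPS_PER_GPU = 67.0


def peak_petaflops(nodes, gpus_per_node=GPUS_PER_NODE,
                   tflops_per_gpu=ASSUMED_TFLOPS_PER_GPU):
    """Aggregate peak in PetaFLOPS for a given number of nodes."""
    return nodes * gpus_per_node * tflops_per_gpu / 1000.0


current = peak_petaflops(NODES)  # 20 * 8 * 67 / 1000 = 10.72
expanded = peak_petaflops(32)    # 32 * 8 * 67 / 1000 = 17.152

print(f"{NODES * GPUS_PER_NODE} GPUs: about {current:.1f} PetaFLOPS peak")
print(f"{32 * GPUS_PER_NODE} GPUs: about {expanded:.1f} PetaFLOPS peak")
```

Under that assumption the 160 installed GPUs land on the quoted 10.7 PetaFLOPS, and the full 32-node build-out would reach roughly 17 PetaFLOPS.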

As the name suggests, Gautschi-AI was developed to enhance AI research at Purdue. Thanks to support from Purdue Computes and the Institute for Physical AI (IPAI), Gautschi-AI was built with state-of-the-art H100 GPUs, which use NVIDIA’s Hopper architecture and a Transformer Engine to provide training speeds that are four times faster than previous-generation models. The unique, 8-way connected configuration of the nodes means that a researcher can buy one GPU on Gautschi but leverage tens of GPUs if that is what their job requires. This feature alone will save AI researchers both time and money.

Scientific fields are constantly evolving. As knowledge expands and new technology emerges, research methods are in a continual state of flux. AI-based workflows are one of the newest methodological advancements and have quickly been adopted across scientific computing. From large language models (LLMs) and convolutional neural networks (CNNs) to protein structure prediction, robotics, and computer vision, intensive AI workloads are becoming integral to research across the scientific spectrum. An AI cluster like Gautschi will ensure that Purdue researchers have the necessary tools to make new discoveries.

Why Gautschi?

The first question that comes to mind for most Purdue researchers is, “Why should I use Gautschi-AI?”, as Purdue already offers an advanced, GPU-based supercomputer in the Gilbreth cluster. The answer boils down to two things: 1) more powerful GPUs designed to address “capability” problems, and 2) a new scheduler configuration optimized specifically to enable AI workflows.

Gilbreth is designed as an HPC resource optimized for “throughput” applications, supporting general-purpose, medium-scale simulations as well as large numbers of smaller AI jobs, primarily AI inference. Gilbreth’s resources consist primarily of the previous-generation NVIDIA “Ampere” GPUs and are allocated much like CPU-based community clusters, with each purchase delivering a dedicated number of GPUs to a research lab. While Gilbreth is versatile and supports a broad spectrum of research, its allocation model limits the flexibility of AI researchers, making it difficult to scale workloads when needed or to burst in times of high demand, such as conference deadlines.

In contrast to Gilbreth, Gautschi-AI provides dense compute nodes, with 8 GPUs per node and a fully non-blocking network interconnect. This enables Purdue researchers to train and use large AI models and solve bigger problems than ever before, while significantly reducing model training time and enabling a much faster time to science.

In response to focus group discussions with leading AI researchers, Gautschi features a new Slurm scheduler configuration optimized for the unique needs of AI workflows.

Those accustomed to RCAC’s Community Cluster systems often run jobs via Standby mode, where they can take advantage of idle resources on the cluster as long as they are willing to wait until the resources become available. The caveat is that these jobs are limited to four hours. So if a researcher knows their job will require longer than four hours to complete, Standby is effectively not an option.

Many traditional scientific and engineering applications cannot be halted and restarted easily or quickly, so once a job begins, it must run until completion. However, for AI workloads, jobs can be interrupted and restarted with no issue. This is due to the characteristics of the specific jobs and applications. With traditional applications, there are no “save points” along the way. If the job is interrupted for any reason, it has to start over from the beginning, meaning that if a compute job has been running for 10 days and gets interrupted, those 10 days are wasted. But with AI workloads, such as training models, the model continually accounts for the new data it has received or created and saves each new iteration in a file. If the job is interrupted, it does not need to start from the beginning, but can simply load the most recent file and immediately pick up where it left off. To take advantage of this facet of AI workloads, the Slurm scheduler for Gautschi-AI will work differently than RCAC’s other systems.
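
The save-and-resume pattern described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not RCAC's or any framework's implementation: the file name, the `train` function, the `stop_after` parameter (which simulates an interruption), and the toy "weight" update are all hypothetical stand-ins for a real training loop and model state.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write state atomically so an interruption never leaves a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
    os.replace(tmp_path, path)  # atomic rename within one filesystem


def load_checkpoint(path):
    """Return the most recent saved state, or a fresh one if none exists."""
    if os.path.exists(path):
        with open(path) as handle:
            return json.load(handle)
    return {"step": 0, "weight": 0.0}


def train(path, total_steps, checkpoint_every=10, stop_after=None):
    """Toy training loop; stop_after simulates the job being interrupted."""
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if stop_after is not None and state["step"] >= stop_after:
            return state  # simulated interruption: unsaved progress is lost
        state["weight"] += 0.5  # stand-in for a real optimizer update
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        ckpt = os.path.join(workdir, "checkpoint.json")
        train(ckpt, total_steps=100, stop_after=25)  # interrupted at step 25
        print("resuming from step", load_checkpoint(ckpt)["step"])
        print("finished at step", train(ckpt, total_steps=100)["step"])
```

In this sketch an interruption at step 25 costs only the five steps since the last checkpoint at step 20. The checkpoint interval is the trade-off to tune: saving more often loses less work on interruption but spends more time writing files.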

For Gautschi-AI, Slurm will have a low-priority, preemptible Standby mode for the GPUs. Since AI workloads tolerate interruption, there is no need for a time limit on these jobs, meaning that researchers can run long jobs (longer than four hours) outside their dedicated slot at a much lower cost without negatively impacting their jobs or others’. Whether it be five minutes, five hours, or five days, jobs executed via the preemptible mode on Gautschi-AI will continue to run unless a high-priority job needs the space. If interrupted, the Standby job will be requeued and will wait until there are resources for it to be launched again.

Gautschi Allocation Model

In order to reflect the unique Slurm features and confer more flexibility to researchers, Gautschi-AI will feature an updated allocation model.

Gautschi CPU and Gautschi AI will feature different allocation models:

- Gautschi CPU: Faculty purchase a pool of CPUs (partial nodes/shares) for the life of the system, the same as with other Community Cluster systems. Standby mode continues to exist on the CPU partition, as on other Community Cluster systems.

- Gautschi AI: Faculty purchase a 5 GPU-year package (e.g., 1 GPU for 5 years). Workflows can scale up to more GPUs at the same priority to benefit from NVLink and the fully non-blocking network interconnect; in those circumstances, GPU hours will be charged at a proportionally accelerated rate. Standby mode usage will be charged, but at a much slower rate than high-priority usage.
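
To make the GPU-year accounting concrete, here is a small sketch of the arithmetic. It assumes the package is debited in GPU-hours in proportion to the number of GPUs used, as the description suggests. The Standby discount is not specified in the article, so `HYPOTHETICAL_STANDBY_RATE` is an invented placeholder, and the function names are illustrative only.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap days ignored


def package_gpu_hours(gpu_years=5):
    """Total GPU-hours in a package of the given number of GPU-years."""
    return gpu_years * HOURS_PER_YEAR


def charge(gpus, wall_hours, rate=1.0):
    """GPU-hours debited for one job; rate < 1.0 models discounted Standby."""
    return gpus * wall_hours * rate


# Placeholder only: the article says Standby is charged "at a much slower
# rate" but gives no number.
HYPOTHETICAL_STANDBY_RATE = 0.25

budget = package_gpu_hours()          # 5 * 8,760 = 43,800 GPU-hours
one_gpu_day = charge(1, 24)           # 24 GPU-hours
full_node_day = charge(8, 24)         # 192 GPU-hours, eight times faster
standby_day = charge(8, 24, rate=HYPOTHETICAL_STANDBY_RATE)

print(f"package: {budget} GPU-hours")
print(f"1 GPU for a day: {one_gpu_day:.0f} GPU-hours")
print(f"8 GPUs for a day: {full_node_day:.0f} GPU-hours")
print(f"days of continuous 8-GPU use: {budget / full_node_day:.1f}")
print(f"8 GPUs on Standby (hypothetical rate): {standby_day:.0f} GPU-hours")
```

Under these assumptions, the same package that lasts five years on a single GPU would last about 228 days of continuous use on a full 8-GPU node.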

Although Gautschi CPU and Gautschi AI will feature different allocation models, both partitions will be managed by the same scheduler. This allows users to compose efficient workflows that utilize both the CPU and GPU portions. And with two allocation models, researchers can perform CPU-bound work without consuming GPU hours, a boon for AI workflows, since not every step requires GPUs. To make accounting more user-friendly, a single PI account will be used for all partitions.

As with all Purdue Community Cluster systems, RCAC offers world-class support for its Gautschi-AI users. Dedicated AI experts are available to help users optimize workflows and understand how to run their jobs quickly and efficiently on this new system. Anyone concerned that their jobs may not run well and therefore may waste compute time can reach out to RCAC for assistance.

Gautschi-AI is the next giant leap for Purdue, launching the university forward in AI research and capabilities. The specialized Slurm features and new allocation model are unique to the Gautschi-AI system, driven by the specialized needs of AI workflows. The other Community Cluster systems will not be affected by these changes. The Gautschi system is now available for purchase. If you have any questions, please contact rcac-help@purdue.edu.

Early User Program

RCAC is seeking to enroll select participants in an Early User Program (EUP) for the Gautschi-AI system. The EUP will provide users with access to Gautschi free of charge for a period of time, with the intent of helping RCAC identify and remedy any potential issues with the new system. EUPs allow RCAC to ensure the highest possible quality of service for researchers at Purdue. Spots in the program are limited and will be given on a discretionary basis. If you or someone you know would like to participate in the Gautschi-AI EUP, please email rcac-help@purdue.edu with the subject line “Gautschi-AI Early User Program.”

Written by: Jonathan Poole, poole43@purdue.edu